Empowering Your Data Journey: From Extraction to Transformation to Loading

Our ETL Services Include

Data Extraction

We seamlessly extract data from various sources, including databases, applications, and APIs, ensuring that all relevant information is captured.

Data Transformation

Data Loading

Real-Time ETL

ETL Optimization

Platform Deployment

Efficient Deployment

We ensure a smooth deployment of the new platform and verify that all functionality works as expected.

User Training

To maximize adoption, we offer user training to ensure your team is comfortable with the new platform.

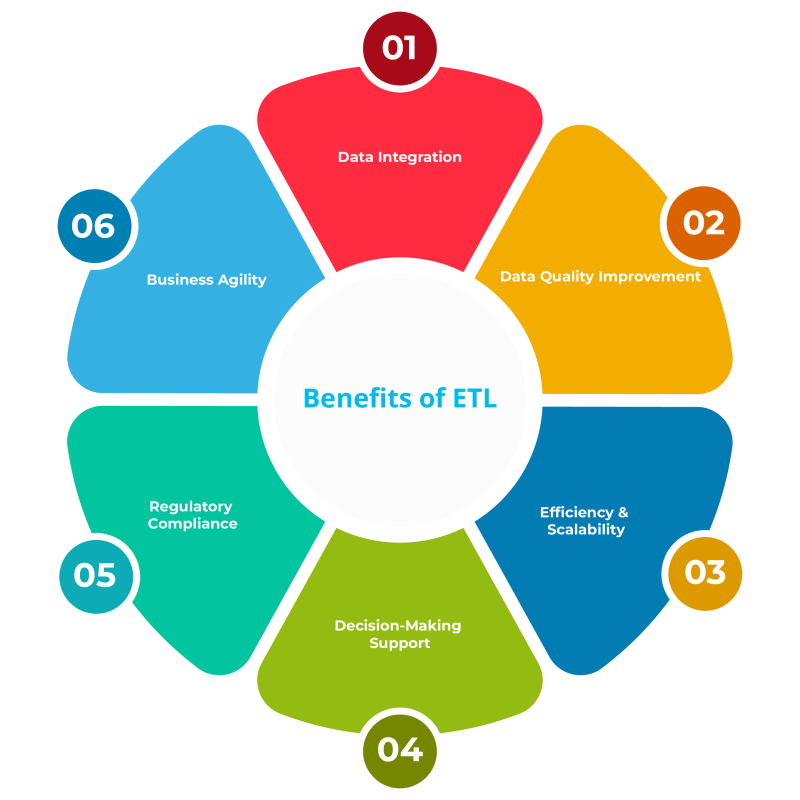

How Can You Maximize the Benefits of ETL?

Data Integration

- ETL enables the integration of data from multiple sources such as databases, applications, and systems into a single, unified view. This integration facilitates comprehensive analysis and reporting by providing a consistent and coherent dataset.

Data Quality Improvement

- During the transformation phase, ETL processes can cleanse and standardize data, correct errors, remove duplicates, and enrich datasets with additional information. This improves data quality, ensuring that the data used for analysis and decision-making is accurate, consistent, and reliable.

Efficiency and Scalability

- ETL processes automate the extraction, transformation, and loading of data, reducing the need for manual intervention and repetitive tasks. This automation improves operational efficiency, saves time, and allows organizations to handle large volumes of data effectively, scaling their data processing capabilities as needed.

Decision-Making Support

- By providing timely access to integrated and high-quality data, ETL enables better decision-making across the organization. Analysts and decision-makers can rely on accurate and comprehensive data insights to identify trends, patterns, and opportunities, leading to more informed and strategic decisions.

How It Works

01. Extraction

- Identifying Data Sources: Determine the sources from which data will be extracted, such as databases, files, APIs, or streaming sources.

- Data Retrieval: Extract data from the identified sources using various methods such as batch extraction, incremental extraction, or real-time streaming.

- Data Profiling: Analyze the extracted data to understand its structure, quality, and characteristics, identifying any inconsistencies, errors, or missing values.
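The retrieval and profiling steps above can be illustrated with a minimal Python sketch. It assumes a relational source reachable through Python's built-in `sqlite3` module and an `updated_at` column that serves as the watermark for incremental extraction; the `orders` table and the `extract_incremental` and `profile` helpers are hypothetical names chosen for illustration, not part of any specific product.

```python
import sqlite3


def extract_incremental(conn, table, watermark_col, last_watermark):
    """Incremental extraction: fetch only rows changed since the last run."""
    # Table and column names are interpolated into the SQL, so they must come
    # from trusted pipeline configuration, never from user input.
    cur = conn.execute(
        f"SELECT * FROM {table} WHERE {watermark_col} > ? ORDER BY {watermark_col}",
        (last_watermark,),
    )
    columns = [d[0] for d in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


def profile(rows):
    """Basic profiling: row count plus null and distinct counts per column."""
    report = {"row_count": len(rows), "columns": {}}
    if not rows:
        return report
    for col in rows[0]:
        values = [r[col] for r in rows]
        non_null = [v for v in values if v is not None]
        report["columns"][col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
        }
    return report


# Demo with a hypothetical "orders" source table held in memory.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, email TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "a@example.com", "2024-01-01"),
        (2, None, "2024-01-05"),
        (3, "c@example.com", "2024-01-09"),
    ],
)
new_rows = extract_incremental(conn, "orders", "updated_at", "2024-01-02")
print(len(new_rows))                          # 2
print(profile(new_rows)["columns"]["email"])  # {'nulls': 1, 'distinct': 1}
```

Storing the highest `updated_at` value seen after each run and passing it in as `last_watermark` next time is what keeps the extraction incremental. A production pipeline would typically also need to handle late-arriving updates and deleted rows, which this sketch does not attempt.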

02. Transformation

- Data Cleaning: Cleanse the extracted data by removing duplicates, correcting errors, standardizing formats, and handling missing values.

- Data Enrichment: Enhance the extracted data with additional information, such as calculated fields, derived attributes, or data from external sources.

- Data Aggregation: Aggregate and summarize the data as needed for analysis, for example by grouping records, calculating totals, or generating statistical measures.

03. Loading

- Target Schema Design: Design the schema of the target database or data warehouse into which the transformed data will be loaded.

- Data Mapping: Map the transformed data attributes to the corresponding fields in the target schema, ensuring alignment and consistency.

- Data Loading: Load the transformed data into the target database or data warehouse using appropriate methods such as bulk loading, incremental loading, or streaming ingestion.
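As a rough illustration of the transformation and loading steps, the following Python sketch cleans, enriches, aggregates, and loads a handful of in-memory records into SQLite. The sample records, the `VAT_RATES` lookup used for enrichment, the rule of replacing a missing amount with 0.0, and the `sales_by_country` target table are all assumptions made for the example.

```python
import sqlite3
from collections import defaultdict

# Hypothetical raw records as they might arrive from extraction.
RAW = [
    {"id": 1, "country": " us ", "amount": "10.50"},
    {"id": 1, "country": " us ", "amount": "10.50"},  # duplicate record
    {"id": 2, "country": "DE", "amount": None},       # missing value
    {"id": 3, "country": "de", "amount": "4"},
]

# Hypothetical lookup data used for enrichment.
VAT_RATES = {"US": 0.0, "DE": 0.19}


def clean(rows):
    """Keep the first record per id, standardize country codes, fill missing amounts."""
    seen, out = set(), []
    for r in rows:
        if r["id"] in seen:
            continue  # drop duplicates
        seen.add(r["id"])
        out.append({
            "id": r["id"],
            "country": r["country"].strip().upper(),  # " us " -> "US"
            # Assumption: a missing amount is treated as 0.0.
            "amount": float(r["amount"]) if r["amount"] is not None else 0.0,
        })
    return out


def enrich(rows, rates):
    """Add a derived gross_amount field using the lookup table."""
    return [
        {**r, "gross_amount": round(r["amount"] * (1 + rates.get(r["country"], 0.0)), 2)}
        for r in rows
    ]


def aggregate(rows):
    """Sum gross_amount per country."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["gross_amount"]
    return dict(totals)


def load(conn, totals):
    """Bulk-load the aggregates into the target table in a single transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_country (country TEXT PRIMARY KEY, total REAL)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            # INSERT OR REPLACE keyed on the primary key stops re-runs from duplicating rows.
            "INSERT OR REPLACE INTO sales_by_country (country, total) VALUES (?, ?)",
            sorted(totals.items()),
        )


conn = sqlite3.connect(":memory:")
totals = aggregate(enrich(clean(RAW), VAT_RATES))
load(conn, totals)
load(conn, totals)  # running the load twice still leaves one row per country
print(conn.execute("SELECT country, total FROM sales_by_country ORDER BY country").fetchall())
```

Making the load idempotent in this way is one simple design choice for batch pipelines: if a run fails partway and is retried, the target table ends up in the same state rather than containing duplicate totals.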

04. Workflow Orchestration

- ETL Workflow Design: Design the overall ETL workflow, defining the sequence of extraction, transformation, and loading tasks, as well as dependencies and error handling.

- Dependency Management: Manage dependencies between different stages of the ETL process to ensure data integrity and consistency.

- Monitoring and Maintenance: Implement monitoring tools and processes to track the performance and health of the ETL pipeline, including error logging, data lineage tracking, and performance optimization.
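Dependency management and basic monitoring can be sketched with Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+), which orders tasks so that each one runs only after its upstream tasks. The task names, the skip-downstream-on-failure policy, and the logging format below are illustrative assumptions; dedicated orchestration tools add scheduling, retries, alerting, and lineage tracking on top of this basic idea.

```python
import logging
import time
from graphlib import TopologicalSorter

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")


def run_pipeline(tasks, deps):
    """Run tasks in dependency order, logging timings and errors.

    tasks: mapping of task name -> zero-argument callable
    deps:  mapping of task name -> set of upstream task names
    """
    status = {}
    for name in TopologicalSorter(deps).static_order():
        if any(status.get(up) != "ok" for up in deps.get(name, set())):
            status[name] = "skipped"  # never run on top of failed upstream data
            log.warning("skipping %s: an upstream task did not succeed", name)
            continue
        start = time.perf_counter()
        try:
            tasks[name]()
            status[name] = "ok"
            log.info("%s finished in %.3fs", name, time.perf_counter() - start)
        except Exception:
            status[name] = "failed"
            log.exception("%s failed", name)  # logs the traceback for diagnosis
    return status


def broken_transform():
    raise ValueError("unexpected null in a required column")


# Hypothetical four-step pipeline: extract -> transform -> load -> report.
deps = {"transform": {"extract"}, "load": {"transform"}, "report": {"load"}}
tasks = {
    "extract": lambda: None,
    "transform": broken_transform,
    "load": lambda: None,
    "report": lambda: None,
}
status = run_pipeline(tasks, deps)
print(status)
```

Skipping every task downstream of a failure, rather than letting it run on incomplete data, is one way to serve the data-integrity goal of dependency management; the per-task timings logged here are the kind of signal that monitoring and performance optimization would build on.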

01. Strategy

- Clarification of the stakeholders’ vision and objectives

- Reviewing the environment and existing systems

- Measuring current capability and scalability

- Creating a risk management framework.

02. Discovery Phase

- Defining the client’s business needs

- Analysis of existing reports and ML models

- Review and documentation of existing data sources and data connectors

- Estimation of the project budget and team composition

- Data quality analysis

- Detailed analysis of metrics

- Logical design of data warehouse

- Logical design of ETL architecture

- Proposing several solutions with different tech stacks

- Building a prototype.

03. Development

- Physical design of databases and schemas

- Integration of data sources

- Development of ETL routines

- Data profiling

- Loading historical data into data warehouse

- Implementing data quality checks

- Data automation tuning

- Achieving data warehouse (DWH) stability.

04. Ongoing Support

- Fixing issues within the SLA

- Lowering storage and processing costs

- Small enhancements

- Supervision of systems

- Ongoing cost optimization

- Product support and fault elimination.

Why Choose Complere Infosystem for ETL Services

Expertise

Our team consists of experienced ETL specialists who bring deep knowledge and a proven track record in designing and implementing ETL solutions that meet your specific needs.

Tailored Solutions

We understand that each organization's data requirements are unique. Our ETL services are customized to address your specific data challenges, regardless of complexity.

Cutting-Edge Technology

We stay at the forefront of ETL technology, utilizing the latest tools and methodologies to ensure seamless data flow, accuracy, and performance.