Introduction

Today almost every business depends on data, because data is what allows business owners to make informed decisions. But managing data is not easy, especially when you need to handle large volumes of it. A well-designed data pipeline simplifies and streamlines that processing. This guide explains how to build data pipelines with Databricks, so read on to see the benefits of a well-structured pipeline.

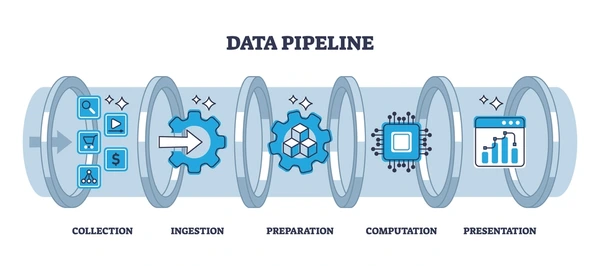

What is a Data Pipeline?

A data pipeline is a stepwise process that extracts data from its sources, processes it, and loads it into a destination. Most business owners today have at least heard of data pipelines. A pipeline is made up of a set of interconnected data processing elements, where the output of one element becomes the input of the next. Because the elements run in a predefined sequence, the chance of errors is reduced. The main purpose of building a data pipeline with Databricks is to make your data flow smoothly, securely, and efficiently.
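
To make the idea of interconnected elements concrete, here is a minimal plain-Python sketch (not Databricks-specific) in which each stage runs in a fixed order and its output becomes the next stage's input. The stage functions, sample values, and the in-memory `destination` list are hypothetical stand-ins for real sources and storage.

```python
from typing import Callable, Iterable

# A stage takes the previous stage's output and returns its own output.
Stage = Callable[[object], object]

destination: list[int] = []  # hypothetical in-memory stand-in for real storage


def run_pipeline(data: object, stages: Iterable[Stage]) -> object:
    """Run the stages in a fixed order, feeding each result into the next stage."""
    for stage in stages:
        data = stage(data)
    return data


def extract(_: object) -> list[str]:
    # Hypothetical raw input, including a blank row.
    return [" 42 ", "17", "", "8 "]


def transform(rows: list[str]) -> list[int]:
    # Drop blank rows and convert the rest to integers.
    return [int(r) for r in rows if r.strip()]


def load(values: list[int]) -> int:
    # "Load" by appending to the in-memory destination; return the row count.
    destination.extend(values)
    return len(values)


result = run_pipeline(None, [extract, transform, load])
print(result, destination)
```

Keeping each stage a small function with a clear input and output is what makes the sequence easy to test and rearrange.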

How to Build a Data Pipeline for Streamlining Data with Databricks

Before walking through the steps, it helps to know what Databricks is. Databricks is a data and analytics platform, built on Apache Spark, that simplifies the process of building data pipelines so you can focus on what matters most. A data pipeline gives your business data a systematic path from one place to another for further processing. Here is how to build one step by step with Databricks:

Step 1 – Define Your Objectives: Before getting into the technical aspects, set your goals and objectives. Identify what kind of data you have right now and what you want to achieve with it. Knowing your objectives will guide you in designing an effective pipeline with Databricks.

Step 2 – Extract Data: Once your objectives are set, the next step is to extract data. At this stage you collect data from different sources, such as databases, applications, APIs, and flat files. Databricks helps by providing connectors and libraries that make it easy to read from these sources. Once you have extracted the data, the next step is to transform it.
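
As a rough illustration of extracting from several source types, the plain-Python sketch below reads a flat file, a JSON payload of the kind an API might return, and a database table, then combines them into one list of records. All sources, table names, and field names are hypothetical. On Databricks you would more typically use Spark's `spark.read` API together with the platform's connectors; the sketch only shows the shape of the step.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source 1: a flat file, inlined so the sketch is self-contained.
CSV_TEXT = "order_id,amount\n1,19.99\n2,5.00\n"

# Hypothetical source 2: a JSON payload, as an API might return it.
API_RESPONSE = '[{"order_id": 3, "amount": 12.5}]'


def extract_csv(text: str) -> list[dict]:
    # Parse CSV rows and convert the string fields to proper types.
    return [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"])}
        for r in csv.DictReader(io.StringIO(text))
    ]


def extract_api(payload: str) -> list[dict]:
    return json.loads(payload)


def extract_db(conn: sqlite3.Connection) -> list[dict]:
    cur = conn.execute("SELECT order_id, amount FROM orders")
    return [{"order_id": oid, "amount": amt} for oid, amt in cur.fetchall()]


# Hypothetical source 3: an in-memory SQLite database standing in for an operational DB.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.execute("INSERT INTO orders VALUES (4, 7.25)")

# Combine all sources into one list of records with the same shape.
records = extract_csv(CSV_TEXT) + extract_api(API_RESPONSE) + extract_db(conn)
print(len(records))
```

Normalizing every source into the same record shape during extraction keeps the transformation step simpler.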

Step 3 – Data Transformation: At this step you clean, enrich, and reshape your data so that it becomes usable and meaningful. Databricks provides many advanced tools and libraries that make data transformation easier and faster. Transforming data with Databricks helps you avoid messy, inconsistent data.
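
The sketch below shows, in plain Python, the kinds of operations "clean, enrich, and reshape" usually means: dropping incomplete and duplicate rows, normalizing text, fixing types, and adding a derived field from a lookup table. The records and the `REGION_BY_COUNTRY` table are hypothetical. On Databricks the same ideas map onto Spark DataFrame methods such as `dropna`, `dropDuplicates`, and `withColumn`, or onto joins for enrichment.

```python
raw = [
    {"order_id": 1, "country": " us ", "amount": "19.99"},
    {"order_id": 1, "country": " us ", "amount": "19.99"},  # duplicate row
    {"order_id": 2, "country": "DE", "amount": None},  # missing amount
    {"order_id": 3, "country": "de", "amount": "12.50"},
]

# Hypothetical lookup table used to enrich each record with a region.
REGION_BY_COUNTRY = {"US": "North America", "DE": "Europe"}


def transform(rows):
    seen = set()
    cleaned = []
    for row in rows:
        # Clean: skip incomplete rows and rows whose order_id was already kept.
        if row["amount"] is None or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        country = row["country"].strip().upper()  # normalize text
        cleaned.append({
            "order_id": row["order_id"],
            "country": country,
            "amount": float(row["amount"]),  # fix the type
            "region": REGION_BY_COUNTRY.get(country, "Unknown"),  # enrich
        })
    return cleaned


orders = transform(raw)
print(orders)
```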

Step 4 – Data Loading: Once your data is transformed, load it into your preferred location, typically a data lake or a data warehouse. Databricks is a popular choice for data warehousing and integrates with widely used storage solutions such as Amazon S3, Azure Data Lake Storage, and more.
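
One detail worth getting right when loading is making the load idempotent, so that re-running a failed or repeated job does not duplicate rows. The plain-Python sketch below illustrates this with an upsert keyed on `order_id`, using an in-memory SQLite database as a hypothetical stand-in for a warehouse. On Databricks, loading is more typically done through Spark's `DataFrame.write` API into tables backed by cloud storage such as Amazon S3 or Azure Data Lake Storage.

```python
import sqlite3

orders = [
    {"order_id": 1, "country": "US", "amount": 19.99},
    {"order_id": 3, "country": "DE", "amount": 12.5},
]


def load(conn, rows):
    """Upsert rows keyed on order_id, so re-running a load does not duplicate data."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    with conn:  # commit on success, roll back on error
        conn.executemany(
            "INSERT OR REPLACE INTO orders VALUES (:order_id, :country, :amount)",
            rows,
        )


warehouse = sqlite3.connect(":memory:")  # hypothetical warehouse stand-in
load(warehouse, orders)
load(warehouse, orders)  # a second run leaves two rows, not four
count = warehouse.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(count)
```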

Step 5 – Data Processing and Analysis: With your transformed data safely stored, Databricks lets you process it further. Its analytics tools and machine learning capabilities support advanced processing and analysis. Without processing and analyzing your data, you cannot extract valuable insights from what you have transformed and stored, so this step is just as important as the others.
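
A typical first analysis on loaded data is an aggregation, for example total revenue per region. The plain-Python sketch below shows that group-and-sum pattern on hypothetical records; on Databricks the equivalent would usually be a Spark `groupBy` followed by an aggregation, or a SQL `GROUP BY` query.

```python
from collections import defaultdict

orders = [
    {"region": "North America", "amount": 19.99},
    {"region": "Europe", "amount": 12.5},
    {"region": "Europe", "amount": 7.5},
]


def revenue_by_region(rows):
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    # Round for reporting and sort so the largest region comes first.
    return sorted(((r, round(t, 2)) for r, t in totals.items()), key=lambda p: -p[1])


report = revenue_by_region(orders)
print(report)
```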

Step 6 – Monitoring and Maintenance: Building the pipeline and processing data once is not enough to keep getting the results you want. To benefit from your pipeline consistently, you need to monitor and maintain it regularly; this is an ongoing task, not a one-time one. Databricks' monitoring and alerting features can handle much of this work for you, so you can be more confident in the safety, accuracy, reliability, and performance of your data.
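
Databricks provides its own monitoring and alerting features; the plain-Python sketch below only illustrates the underlying idea: run data quality checks after each pipeline run and raise an alert when one fails. The check names, thresholds (`min_rows`, `max_null_fraction`), logger name, and sample run are all hypothetical, and logging stands in for a real alert channel.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline_monitor")  # hypothetical logger name


def check_run(rows, min_rows=1, max_null_fraction=0.1):
    """Return failed-check messages for one pipeline run (thresholds are hypothetical)."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"row count {len(rows)} below minimum {min_rows}")
    if rows:
        nulls = sum(1 for r in rows if r.get("amount") is None)
        fraction = nulls / len(rows)
        if fraction > max_null_fraction:
            failures.append(
                f"null amount fraction {fraction:.0%} above {max_null_fraction:.0%}"
            )
    return failures


def alert(failures):
    # Stand-in for a real alert channel (email, chat, pager): here we only log.
    for message in failures:
        log.warning("Data quality check failed: %s", message)


run = [{"amount": 10.0}, {"amount": None}, {"amount": 5.0}, {"amount": 2.0}]
failures = check_run(run)
alert(failures)
```

Returning failures as data, rather than alerting inside the checks, keeps the checks easy to test and lets you route alerts wherever your team prefers.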

Benefits of Building a Data Pipeline

Building a data pipeline brings several useful advantages for data quality, processing, and more:

- Improved data quality

- Efficiency and automation

- Real-time information and better decision-making

- Scalability

- Cost savings

- Data security and compliance

- Competitive advantages, and more

Conclusion

Building data pipelines with Databricks helps you get the results your business needs to grow. By simplifying data processing, analysis, and related tasks, you save time and money. You also get higher-quality data and real-time information for making informed decisions. So improve your data quality, efficiency, and scalability by building data pipelines with Databricks.
